A search engine is a program that automatically and regularly accesses the Internet and stores the title, the keywords and, in part, even the content of web pages in a database.

When a user queries a search engine for information, a phrase or a word, the search engine looks it up in this database and, according to certain priority criteria, builds and displays a ranked list of results (a hit list).
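The text does not say which priority criteria are used. As one classic example of such a criterion, the sketch below ranks pages by TF-IDF (term frequency multiplied by inverse document frequency). The scoring formula, the function names and the tiny in-memory "database" of pages are assumptions for illustration, not how any particular search engine orders its hit list.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def rank(query: str, pages: Dict[str, str]) -> List[Tuple[str, float]]:
    """Hit list for query: (url, score) pairs of matching pages, highest TF-IDF score first."""
    term_counts = {url: Counter(tokenize(text)) for url, text in pages.items()}
    n_pages = len(pages)
    scores: Dict[str, float] = {}
    for term in set(tokenize(query)):
        df = sum(1 for counts in term_counts.values() if term in counts)  # pages containing term
        if df == 0:
            continue
        idf = math.log(n_pages / df) + 1.0  # rarer terms weigh more; +1 keeps common terms > 0
        for url, counts in term_counts.items():
            if counts[term]:
                scores[url] = scores.get(url, 0.0) + counts[term] * idf
    # Highest score first; ties broken alphabetically so the order is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))
```

Pages that contain none of the query terms never receive a score, so they do not appear in the hit list at all.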

A search engine works in three stages:

1. Crawling

2. Indexing

3. Searching

To build the database of a search engine, a search robot (bot, spider) is required. This is a software program written in a language such as Perl, Ruby, Java or PHP.

The robot uses specific methods to extract the useful links from the pages it visits and stores them in a database.
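One way to picture this step, assuming a relational store: the sketch below turns the raw `href` values found on a page into absolute URLs, keeps only http(s) links, drops `#fragments` and links back to the page itself, and stores each URL once in an SQLite table. The table layout, the function names and the filtering rules are assumptions for illustration, not a prescribed method.

```python
import sqlite3
from typing import List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


def normalize_link(base_url: str, href: str) -> Optional[str]:
    """Make href absolute, drop its #fragment, and keep it only if it is an http(s) URL."""
    absolute, _fragment = urldefrag(urljoin(base_url, href.strip()))
    parts = urlparse(absolute)  # urlparse already lower-cases the scheme
    if parts.scheme not in ("http", "https") or not parts.netloc:
        return None  # mailto:, javascript:, ftp: ... links are not crawled
    # Host names are case-insensitive; the path is not, so it is left unchanged.
    return parts._replace(netloc=parts.netloc.lower()).geturl()


def store_links(db: sqlite3.Connection, page_url: str, hrefs: List[str]) -> int:
    """Save the useful links found on page_url; returns how many new URLs were stored."""
    db.execute("CREATE TABLE IF NOT EXISTS links (url TEXT PRIMARY KEY, found_on TEXT)")
    before = db.execute("SELECT COUNT(*) FROM links").fetchone()[0]
    for href in hrefs:
        url = normalize_link(page_url, href)
        if url is None or url == page_url:  # skip non-web links and links to the page itself
            continue
        # PRIMARY KEY + OR IGNORE: a URL already in the database is not stored twice.
        db.execute("INSERT OR IGNORE INTO links (url, found_on) VALUES (?, ?)", (url, page_url))
    db.commit()
    return db.execute("SELECT COUNT(*) FROM links").fetchone()[0] - before
```

For example, on the page `https://example.com/docs/`, the links `intro.html` and `intro.html#s2` collapse to a single stored URL, while `mailto:` addresses are discarded.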

Search tools use the following methods to retrieve information: keyword or phrase search, Boolean operators, proximity search and truncation. We can also locate a resource by its specific URL (Uniform Resource Locator). This method is usually useful and fast, but the URL of the resource may have changed because of the dynamic nature of the Internet.
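The retrieval methods listed above can be illustrated on a positional inverted index (for each term, the documents and word positions where it occurs). In this sketch, Boolean AND, OR and NOT become set intersection, union and difference; truncation is a prefix match written with a trailing `*`; proximity checks whether two terms occur within `k` words of each other. The index layout, the `*` syntax and the function names are assumptions chosen for illustration.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

# Positional inverted index: term -> {doc_id: [word positions in that document]}
Index = Dict[str, Dict[str, List[int]]]


def build_index(docs: Dict[str, str]) -> Index:
    index: Index = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, term in enumerate(re.findall(r"\w+", text.lower())):
            index[term].setdefault(doc_id, []).append(pos)
    return dict(index)


def docs_with(index: Index, term: str) -> Set[str]:
    """Keyword lookup; a trailing '*' means truncation (every term with that prefix matches)."""
    term = term.lower()
    if term.endswith("*"):
        prefix = term[:-1]
        return {doc for t, postings in index.items() if t.startswith(prefix) for doc in postings}
    return set(index.get(term, {}))


def near(index: Index, a: str, b: str, k: int) -> Set[str]:
    """Proximity: documents in which terms a and b occur within k words of each other."""
    pa, pb = index.get(a.lower(), {}), index.get(b.lower(), {})
    return {
        doc
        for doc in pa.keys() & pb.keys()
        if any(abs(i - j) <= k for i in pa[doc] for j in pb[doc])
    }


if __name__ == "__main__":
    docs = {
        "d1": "The web crawler collects web pages",
        "d2": "A spider follows links between pages",
        "d3": "Crawling and indexing the web",
    }
    idx = build_index(docs)
    print(docs_with(idx, "web") & docs_with(idx, "pages"))       # web AND pages
    print(docs_with(idx, "spider") | docs_with(idx, "crawler"))  # spider OR crawler
    print(docs_with(idx, "web") - docs_with(idx, "pages"))       # web NOT pages
    print(docs_with(idx, "crawl*"))                              # truncation
    print(near(idx, "web", "pages", 1))                          # proximity
```

Storing word positions, not only document identifiers, is what makes the proximity query possible; a plain document-level index could answer only the Boolean and truncation queries.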

A search engine returns information together with the HTML link to each page. Its web database does not hold a full copy of each listed page, only small parts of it, such as the title, the headers and part of the content.

Each web database is indexed by building a list of such page surrogates, although how well a surrogate represents its page varies from one page to another.
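A page surrogate of the kind described above can be sketched with Python's standard `html.parser` module. The choice of fields (the `<title>`, the first `<h1>` and the first few words of visible text), the 20-word default and the names `SurrogateParser` and `make_surrogate` are assumptions for illustration.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class SurrogateParser(HTMLParser):
    """Collects what a page surrogate keeps: the <title>, the first <h1> and the visible text."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.header = ""
        self.words: List[str] = []
        self._in: Optional[str] = None  # "title" or "h1" while inside that element
        self._h1_seen = False
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "title" or (tag == "h1" and not self._h1_seen):
            self._in = tag

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = max(0, self._skip - 1)
        elif tag == self._in:
            if tag == "h1":
                self._h1_seen = True
            self._in = None

    def handle_data(self, data):
        if self._skip:
            return  # script and style text is not page content
        if self._in == "title":
            self.title += data
            return  # the title is kept separately, not in the body text
        if self._in == "h1":
            self.header += data
        self.words.extend(data.split())


def make_surrogate(html: str, max_words: int = 20) -> Dict[str, str]:
    parser = SurrogateParser()
    parser.feed(html)
    parser.close()
    return {
        "title": " ".join(parser.title.split()),
        "header": " ".join(parser.header.split()),
        "snippet": " ".join(parser.words[:max_words]),
    }
```

Keeping only a few short fields per page is what makes the database much smaller than a full copy of the web, at the cost of surrogates that describe some pages better than others.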

Web crawling can be defined as the process of efficiently collecting a large volume of web pages together with the structure of the links between them.

Web crawling transfers information from web pages to the database so that the pages can be indexed by content. The goal is to store the data of the visited web pages quickly and efficiently.

The crawler is the component of a search engine that collects pages from the web to index them. Spider is an alternative name for a web crawler.

The main features of a crawler are:

1. Robustness: recognize and avoid crawler traps (spider traps), that is, pages or servers that generate an unbounded number of URLs and can keep a crawler busy indefinitely.

2. Politeness: web servers have implicit and explicit rules (such as the robots.txt file) that specify which pages may be collected and how frequently the server can be visited. Crawlers must comply with these rules.

3. Distribution: the ability to run in a distributed way on several machines.

4. Scalability: an architecture that allows the crawl to grow by adding new machines.

5. Efficiency: efficient use of system resources (processor, memory, network capacity).

6. Quality: priority is given to web pages that provide quality answers to users' questions.

7. Freshness: the crawler operates continuously, requesting new copies of previously collected pages. The collection frequency should be approximately equal to the rate at which each page changes.

8. Extensibility: the ability to handle new data formats and new protocols, which calls for a modular crawler architecture.
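Politeness and robustness from the list above can be sketched with Python's standard `urllib.robotparser`, which reads the explicit rules of a robots.txt file (`Disallow`, `Crawl-delay`). The implicit rule is modelled as a minimum delay between two requests to the same host. The crawler name `ExampleBot`, the 1-second default delay and the trap thresholds are hypothetical values, and the trap test is only a simple heuristic.

```python
import time
from typing import Dict, Optional
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleBot"  # hypothetical crawler name
DEFAULT_DELAY = 1.0        # assumed seconds between two requests to the same host


class PolitenessPolicy:
    """Explicit rules from robots.txt plus an implicit minimum delay per host."""

    def __init__(self) -> None:
        self.robots: Dict[str, RobotFileParser] = {}
        self.next_allowed: Dict[str, float] = {}

    def add_robots(self, host: str, robots_txt: str) -> None:
        # A real crawler would download http://<host>/robots.txt before the first request.
        rp = RobotFileParser()
        rp.parse(robots_txt.splitlines())
        self.robots[host] = rp

    def allowed(self, url: str) -> bool:
        rp = self.robots.get(urlparse(url).netloc)
        return rp is None or rp.can_fetch(USER_AGENT, url)

    def delay(self, host: str) -> float:
        rp = self.robots.get(host)
        crawl_delay = rp.crawl_delay(USER_AGENT) if rp else None
        return float(crawl_delay) if crawl_delay is not None else DEFAULT_DELAY

    def wait_time(self, url: str, now: Optional[float] = None) -> float:
        """Seconds to wait before the host of this URL may be contacted again."""
        now = time.monotonic() if now is None else now
        return max(0.0, self.next_allowed.get(urlparse(url).netloc, 0.0) - now)

    def record_fetch(self, url: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        host = urlparse(url).netloc
        self.next_allowed[host] = now + self.delay(host)


def looks_like_trap(url: str, max_len: int = 200, max_depth: int = 10, max_repeats: int = 2) -> bool:
    """Robustness heuristic: very long, very deep or self-repeating paths often signal a spider trap."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if len(url) > max_len or len(segments) > max_depth:
        return True
    return any(segments.count(s) > max_repeats for s in set(segments))
```

The delay is tracked per host rather than per URL because politeness concerns the load placed on each web server.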

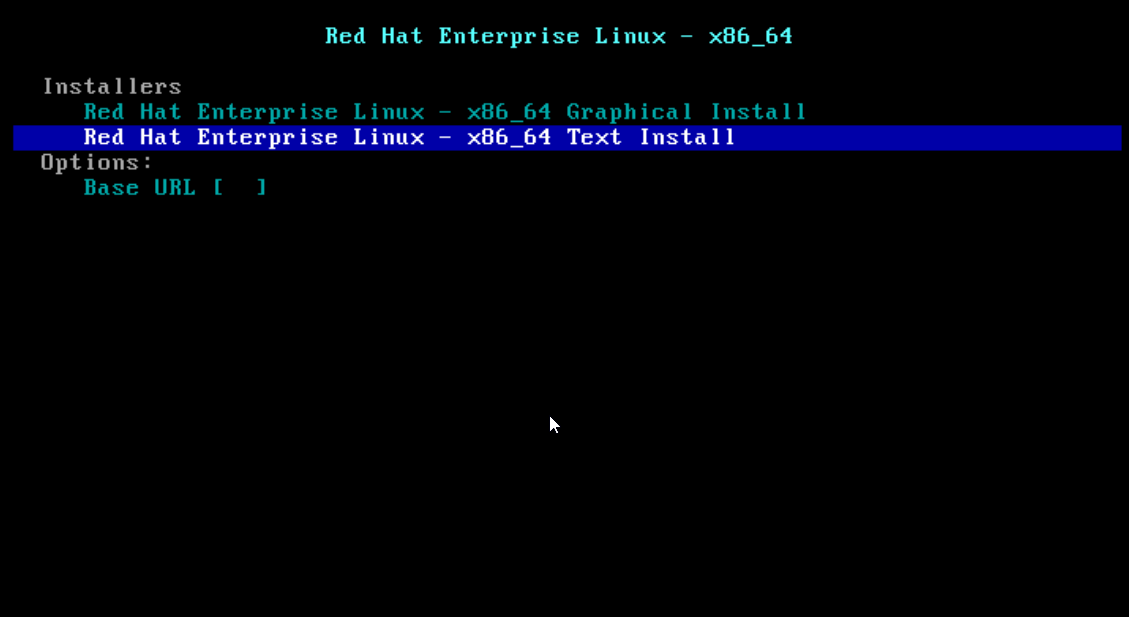
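Feature 7 in the list above asks for a collection frequency close to the frequency at which each page changes. One simple adaptive policy, assumed here for illustration rather than taken from the text, compares a fingerprint of each new copy with the previous one: if the page changed, the revisit interval is halved; if not, it is doubled, within fixed bounds. The one-day starting interval, the bounds and the names are hypothetical.

```python
import hashlib
from dataclasses import dataclass

MIN_INTERVAL = 3600.0        # assumed lower bound: one hour
MAX_INTERVAL = 30 * 86400.0  # assumed upper bound: thirty days


@dataclass
class PageState:
    interval: float = 86400.0  # assumed initial revisit interval: one day
    digest: str = ""           # fingerprint of the last collected copy
    next_visit: float = 0.0    # time (in seconds) of the next scheduled fetch


def update_schedule(state: PageState, content: bytes, now: float) -> PageState:
    """Adapt the revisit interval after a fetch: halve it if the page changed, double it if not."""
    digest = hashlib.sha256(content).hexdigest()
    if state.digest:  # there is a previous copy to compare against
        if digest == state.digest:
            state.interval = min(state.interval * 2, MAX_INTERVAL)
        else:
            state.interval = max(state.interval / 2, MIN_INTERVAL)
    state.digest = digest
    state.next_visit = now + state.interval
    return state
```

Over repeated visits the interval roughly oscillates around the page's typical change interval; production crawlers use more refined estimates of each page's change rate.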

How a crawler works:

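1. Start from an initial set S of URLs, called the seed set.

2. Set F = S; F is called the crawler's URL frontier.

3. Select a URL from F and fetch the corresponding web page P.

4. Parse P to extract its text and links, and add the newly found URLs to F.

5. Repeat from step 3 until F is empty (or a page limit is reached).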
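The crawl loop can be sketched as follows, assuming that after fetching P the crawler parses it and adds the links it has not seen before to F, then repeats. Taking URLs from F in first-in, first-out order gives a breadth-first crawl; that order, the `max_pages` limit and the `fetch_links` callback (a hypothetical stand-in for fetching and parsing a page) are choices made for this sketch. The function also returns the link structure collected along the way.

```python
from collections import deque
from typing import Callable, Dict, List, Set


def crawl(seeds: List[str], fetch_links: Callable[[str], List[str]],
          max_pages: int = 100) -> Dict[str, List[str]]:
    """Breadth-first crawl from the seed set S; returns the link graph url -> outgoing links."""
    frontier = deque(seeds)      # F = S
    seen: Set[str] = set(seeds)  # URLs already placed in F, so none is queued twice
    graph: Dict[str, List[str]] = {}
    while frontier and len(graph) < max_pages:
        url = frontier.popleft()  # select a URL from F
        links = fetch_links(url)  # fetch page P and extract its links
        graph[url] = links
        for link in links:
            if link not in seen:  # only new URLs join the frontier
                seen.add(link)
                frontier.append(link)
    return graph
```

With an in-memory "web" such as `{"a": ["b", "c"], "b": ["c", "a"], "c": ["d"], "d": []}` and `fetch_links=lambda u: web.get(u, [])`, a crawl seeded with `["a"]` visits the pages in the order a, b, c, d.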

The functional components of a crawler

Modular Structure:

1. URL frontier: contains the URLs of the pages that are still to be collected by the crawler.

2. DNS resolution: a module that determines the IP address of the web server from which the page specified by a URL is to be downloaded.

3. Fetch: a module that uses HTTP to download a web page from a URL.

4. Parse: a module that extracts text and links from the collected web pages.
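The DNS resolution, fetch and parse modules listed above can be sketched as separate functions using only Python's standard library; the URL frontier is simply the queue of pending URLs and is not repeated here. The cache size, the `ExampleBot/0.1` User-Agent string and the function names are hypothetical. Note that `urllib.request.urlopen` performs its own DNS lookup, so `resolve` here only illustrates a cached DNS module; a crawler with a separate DNS component would hand the cached address to its own HTTP client.

```python
import socket
import urllib.request
from functools import lru_cache
from html.parser import HTMLParser
from typing import List, Tuple
from urllib.parse import urljoin


@lru_cache(maxsize=10_000)
def resolve(host: str) -> str:
    """DNS resolution module: host name -> first IP address (cached, since lookups are slow)."""
    return socket.getaddrinfo(host, None)[0][4][0]


def fetch(url: str, timeout: float = 10.0) -> str:
    """Fetch module: download the page over HTTP(S) and decode it as text."""
    request = urllib.request.Request(url, headers={"User-Agent": "ExampleBot/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class _LinkTextParser(HTMLParser):
    """Collects href values of <a> tags and all non-blank text (script/style text is not filtered)."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        if data.strip():
            self.text.append(data.strip())


def parse(base_url: str, html: str) -> Tuple[str, List[str]]:
    """Parse module: return the page text and its links as absolute URLs."""
    parser = _LinkTextParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text), [urljoin(base_url, href) for href in parser.links]
```

Keeping the modules separate matches the extensibility requirement: supporting a new protocol or document format means replacing `fetch` or `parse` without touching the rest of the crawler.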